Issue #5: Dimensional Dispatch

Hi everyone!

We're running a week late on Issue #5. Why? Well, it seemed like every day something monumental would happen and I'd go: let's wait one more day so I can put this in the newsletter. But alas, that's just the way the news cycle works, so it came time for me to officially make a move on this issue.

I'm sure you all caught Apple's September 12th event last week. Turns out, there's not too much that wasn't already rumored. In fact, we were hoping the event would bring a little bit more than what Apple had to give, but besides a casual mention of "spatial video" and "memories in 3D" (where have we heard that one before 🤔), we were left with more questions than answers.

Luckily for you (wouldn't have happened if I didn't procrastinate, so you're welcome!), our friends at EveryPoint released an episode of Computer Vision Decoded with a full breakdown of what the new camera upgrades to the iPhone 15 and 15 Pro mean for depth maps and spatial video. Watch that in-depth (pun intended) video here.

Apple aside, we're also well aware that Gaussian Splatting is making its way into everyone's newsfeeds this week. (Bilawal, Jonathan, Manu: I see you!!!) Perhaps it's because of the people I follow, or perhaps it's because it really is the next big thing. Either way, with integrations now available for Unity and even the web, it's possible to blend traditional 3D scenes with Gaussian Splats, which means this may be coming to a workflow near you sooner than you think.

However, we are still loyal to NeRFs since, so far, they're one of the fastest ways we know to get from 3D capture → Looking Glass. This week, we decided to put our money where our mouth is and publish a video about how to do exactly that. Turns out, it's super simple and all you really need is a phone that can capture video, the Luma app and a Looking Glass. In this video, I walk you through how I took a quick video, shot with my iPhone 13 Pro at Galaxy's Edge, from NeRF → hologram in just a matter of minutes.

Meanwhile, here are some other things that caught our attention in the last three weeks:

MagicAvatar: multimodal avatar generation and animation

MagicAvatar is a multimodal framework capable of converting various input modalities (text, video, and audio) into motion signals that subsequently generate/animate an avatar. You can create avatars from simple prompts ("a group of k-pop stars dancing in a volcano" is one of my favorites) or, given a source, create avatars that follow the given motion (with added prompts). Just look at these results!

Refik Anadol x Sphere

Best known for his work at MoMA, Turkish new media artist Refik Anadol will soon be presenting his work at the premiere of the Sphere in Las Vegas (yeah, remember that?). Anadol will be debuting a hallucinatory algorithmic installation specially made for the Sphere using images sourced from satellites, NASA's Hubble telescope, as well as publicly sourced images of plant life around the world.

“This media embedded into architecture has been my vision now for so many years, since I watched Blade Runner at 8 years old,” Anadol mused in his interview with the Los Angeles Times. “Now I’m an artist visualizing the winds of Vegas and transforming the world’s largest screen into sculpture. I’m able to dream.”

Apple TV+'s upcoming Godzilla universe reportedly partly filmed in 3D for Vision Pro

This is a little late (first reported in mid-August by 9to5Mac), but the upcoming Godzilla universe TV series is rumored to have been partially filmed in Apple's Immersive Video format, primed for a spatial 3D playback experience on the upcoming Apple Vision Pro headset. It's obvious that with its own production capabilities, Apple is able to drive forward content production in native 3D. While they have not officially confirmed the use of 3D cameras in production, it is believed that they are working on an array of new content for the Vision Pro headset with its new proprietary video format. As you know, we make 3D displays, so any 3D content is welcome. If someone has more insight or dish on this, feel free to hit reply and share the juicy details.

Agents - a short film about AI agents

Speaking of films, the team at Liquid City collaborated with Niantic to imagine what it would be like to live in a future with AI x XR agents. This short film was directed by Keiichi Matsuda, an independent designer / director who previously worked on the concept short film Hyper Reality.

What is going on at Unity?

We're still waiting to see what the light is at the end of the tunnel after this rollercoaster of a week of announcements at Unity. Last Tuesday, Unity announced a controversial new fee structure that turned the 3D development world upside down, with many developers and game studios immediately looking for ways to port their games to Unreal and Godot. The news seemed to take a lot of people by surprise (including some members of our community who work at Unity in developer relations!). Early this week, Unity made some updates to their runtime fee (in this updated structure here) and also issued an apology for this oversight. What's next? We're not sure. But we are certainly paying close attention to how this might resolve.

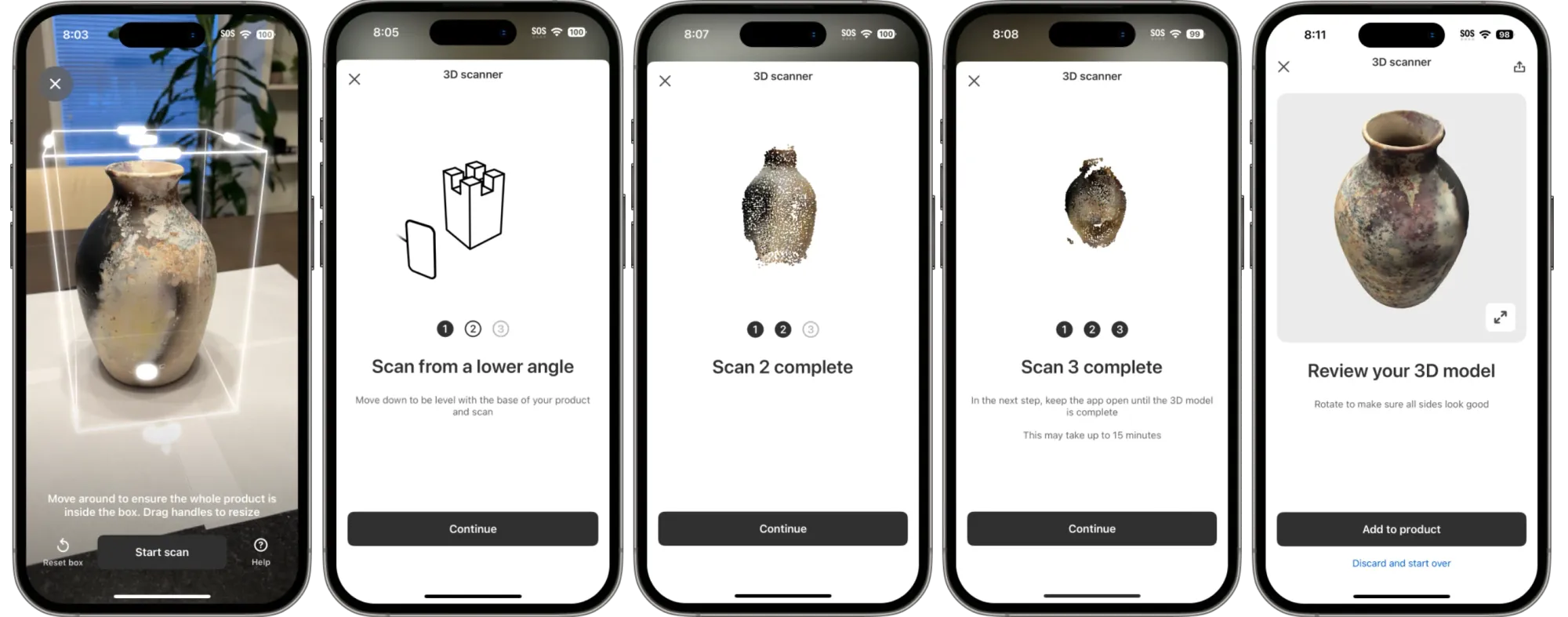

Shopping in the third dimension

In what appears to be a transformative step in the right direction, Harley Finkelstein, Shopify's President, casually announced on LinkedIn two days ago that it is now possible for all Shopify merchants to create 3D models directly using the Shopify App. There's a quick tutorial on how to get started here. It's not entirely clear how this is different from using an app like Polycam / Scaniverse (assuming we're using photogrammetry techniques here), but given that the 3D scan processing is happening natively in the Shopify app, I can see this trending in the right direction for bringing 3D product photos into our browsers. And soon, perhaps Looking Glass displays will just be the 3D shopping window you never thought you needed.

This announcement follows shortly after Amazon came out with updates around visual and AR search early last week. The AR feature is now upgraded to support viewing tabletop items (like coffee machines) and moving things around your space.

Generative Image Dynamics

A new paper out of Google Research shows that it's possible to simulate how the objects in a scene respond to interactive input, and to generate a more dynamic looping video, all from a single input image. The model uses a frequency-coordinated diffusion sampling process to predict a per-pixel long-term motion representation in the Fourier domain. These folks call it "a neural stochastic motion texture" but we like to refer to it simply as: magic. You can read more about the research here. They have some pretty neat interactive examples that you can tug and pull at too!

Quick links

- Venice Film Festival 2023 Is Cinematic AI’s Coming Out Party via Charlie Fink at Forbes

- Nintendo rumored to be working with Google on a VR headset via Mixed News

- How to View 3D Gaussian Splatting Scenes in Unity via Jonathan Stephens

- Roblox’s new AI chatbot will help you build virtual worlds via The Verge

- TikTok introduces a way to label AI-generated content via The Verge

- Introduction to 3D Gaussian Splatting via Hugging Face

Before you go...

That's all for this week, folks!

A reminder that we are coming at you hard and fast with the latest and greatest 3D and AI news of the season and we'd love it if you told a friend or two about it.

Who are we? We're the makers of the holographic display known as Looking Glass Portrait (as well as some bigger displays too). Check them out here.