Issue #4: Dimensional Dispatch

The smell of fall is in the air.

And it's smelling pretty three-dimensional.

Before we get into the 3D and AI news of the fortnight, I wanted to start with some pretty exciting news of our own. Just one week ago, we launched our very own Image to Hologram service on our Blocks platform. Since then, we've seen a rapid surge of new users and more holograms uploaded each day than ever before. If you haven't given it a spin yet, I highly recommend trying it out for yourself. Upload an image - any image - and see it immediately in full parallax in your browser. Or, even better, if you have a Looking Glass, cast the hologram directly to it. Try out Blocks for yourself and convert your first image here.

For a full deep dive into exactly what we launched, check out this blog post here.

Alongside that, I'm also excited to share a brand new video essay about depth maps by none other than our resident Creative Technologist, Missy. Have you ever wondered how computers... see? This is the first in a new series of videos we're working on to break down some of the core concepts behind our technology. We hope you enjoy it! As always, you can help us out by sharing this blog post and the video, and by liking and subscribing. We appreciate you!
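For the curious, here's a rough idea of what a depth map makes possible. One common technique (often called depth-image-based rendering) fakes a new viewpoint by shifting each pixel sideways in proportion to how close it is to the camera. The sketch below does this for a single row of pixels. It's a simplified illustration under our own assumptions (depth normalized to [0, 1] with 1 meaning nearest; `warp_row` is a hypothetical name), not a description of how Blocks actually converts images.

```python
def warp_row(colors, depths, camera_offset):
    """Forward-warp one scanline of pixels to a shifted viewpoint.

    Assumption: depths are normalized to [0, 1], where 1 = nearest.
    Hypothetical helper for illustration only.
    """
    width = len(colors)
    out = [None] * width   # None marks a hole (nothing visible there yet)
    zbuf = [-1.0] * width  # nearest depth written to each target pixel so far
    for x in range(width):
        d = depths[x]
        # Nearer pixels (larger d) shift farther: that difference is parallax.
        nx = x + round(camera_offset * d)
        # Keep the pixel only if it lands on screen and is nearer than
        # whatever already landed there (simple occlusion handling).
        if 0 <= nx < width and d > zbuf[nx]:
            out[nx] = colors[x]
            zbuf[nx] = d
    return out


print(warp_row(["a", "b", "c", "d"], [0.0, 0.0, 1.0, 0.0], 1))
```

On a four-pixel row where only the third pixel is in the foreground, that pixel moves one step right, hiding the background pixel behind it and leaving a hole (`None`) where it used to be. Filling those holes plausibly is one of the tricky parts of turning a single image into something you can look around.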

Now onto this week's top stories.

Gaussian Splatting

We loosely covered the emergence of Gaussian Splatting in our last issue, but there has been a flurry of new research, tutorials and - well - splats since. As we predicted, some of the most informative content has come from Jonathan Stephens, who released a full 40-minute beginner's guide (I've been waiting for this one) that takes you from zero to your own Gaussian Splat in no time. I highly recommend starting here if you spent the last two weeks thinking:

"This is so cool, but how the heck do I make one?"

On the same splat, a few folks out there are working on porting the Gaussian Splatting paper to the web (and you know we love the web). Approaches range from running the process on a local server and sending the results over the web, all the way to running everything in WebGL or WebGPU.

- This is Michał Tyszkiewicz's web viewer for Gaussian Splatting, with client-side interactive rendering.

- Jakub Červený is working on a WebGL renderer for Gaussian Splatting and has shared some of his findings here.

- Dylan Ebert is also working on a web demo that pairs a socket-based JavaScript frontend with a Python backend; check it out here.

Many web-based Gaussian Splatting demos are clearly limited by the sheer computational power needed to render these scenes, and I'm confident we'll see improvements on this front in the coming months. If not, weeks.
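If you're wondering why these demos are so heavy, here's the heart of what a splat renderer does for every pixel: sort the splats by depth, then blend them front to back, each one weighted by a Gaussian falloff and by how much light is still getting through. This is a deliberately simplified Python sketch under our own assumptions (splats already projected to the screen, round instead of stretched ellipses, hypothetical `Splat2D` and `shade_pixel` names). Production renderers typically do this on the GPU, with full 2D covariances (rotated, stretched ellipses) and tile-based sorting.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Splat2D:
    """A Gaussian already projected onto the screen (hypothetical type)."""
    x: float        # center, in pixels
    y: float
    depth: float    # distance from the camera, used for sorting
    sigma: float    # isotropic spread in pixels (simplification)
    opacity: float  # peak opacity in [0, 1]
    color: Tuple[float, float, float]


def shade_pixel(px, py, splats, bg=(0.0, 0.0, 0.0)):
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through
    # Front-to-back: nearest splats contribute first.
    for s in sorted(splats, key=lambda s: s.depth):
        dx, dy = px - s.x, py - s.y
        falloff = math.exp(-0.5 * (dx * dx + dy * dy) / (s.sigma ** 2))
        # Cap alpha below 1 so transmittance never reaches exactly zero.
        alpha = min(0.99, s.opacity * falloff)
        for c in range(3):
            color[c] += transmittance * alpha * s.color[c]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # early exit: pixel is effectively opaque
            break
    # Whatever light remains shows the background.
    return tuple(color[c] + transmittance * bg[c] for c in range(3))


near_red = Splat2D(0.0, 0.0, 1.0, 1.0, 0.5, (1.0, 0.0, 0.0))
far_blue = Splat2D(0.0, 0.0, 2.0, 1.0, 0.5, (0.0, 0.0, 1.0))
print(shade_pixel(0.0, 0.0, [far_blue, near_red]))
```

Here the half-transparent red splat in front contributes 0.5 red, and the blue splat behind it only gets the remaining half of the light, contributing 0.25 blue, regardless of the order the list was passed in. A real scene repeats this loop for every pixel over however many splats overlap it, which is the work web versions are trying to squeeze into a browser tab.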

Hugues Bruyère from the community has been publishing some of the best Gaussian Splats (is this what we call them now?) out there. You can follow him here. I especially love the ones where he uses a magnifying glass to amplify details in his captures. It reminds me a lot of what we do with light field photo sets to help bring out the details of a capture.

Text → 3D Models get materials

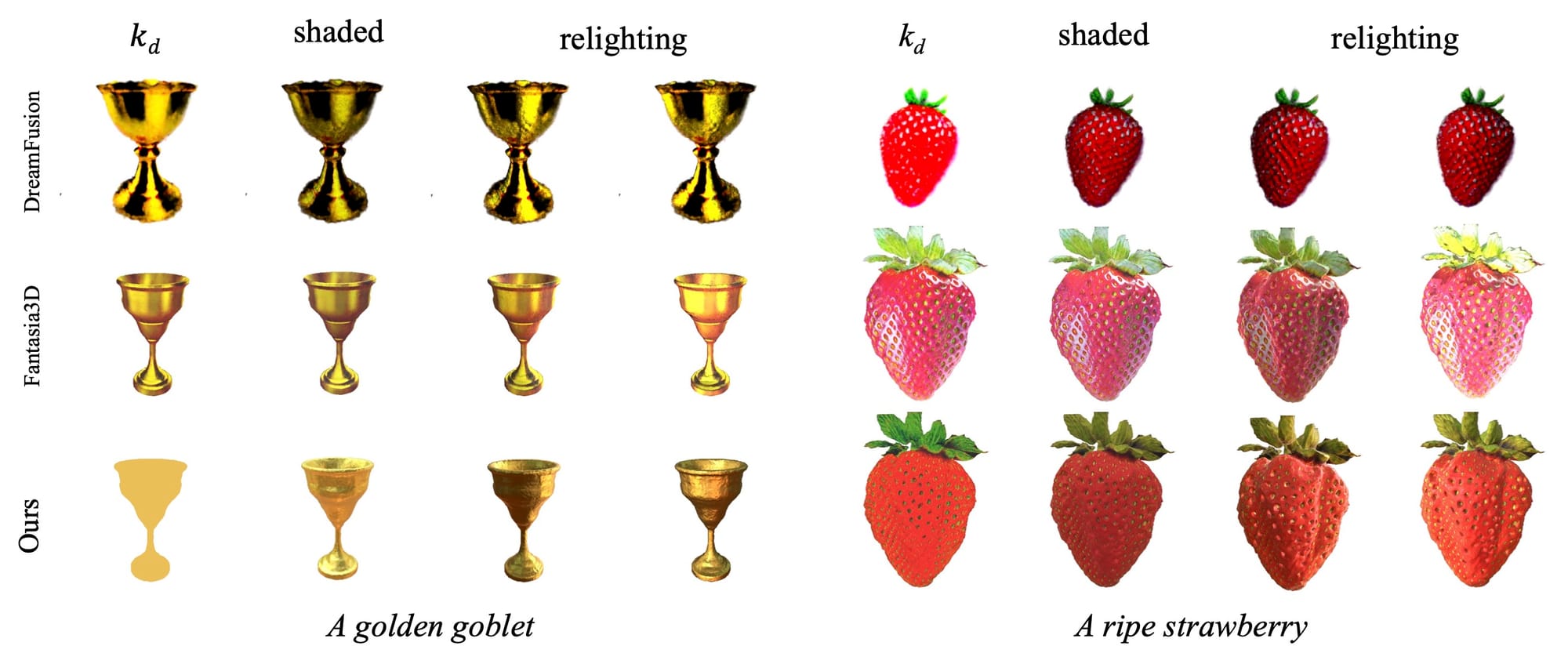

A new paper called MATLABER, from Shanghai AI Laboratory and S-Lab at Nanyang Technological University, improves text-to-3D generation by adding materials to the equation. Until now, text-to-3D generation has struggled to recover high-fidelity object materials. MATLABER uses a novel latent BRDF auto-encoder for material generation, which allows objects created with the model to reflect light, show proper metallic reflections and have the other characteristics we expect from 3D models.

Midjourney introduces inpainting

While it seems like Grimes has had this feature for well over a month now, normal plebeians like us now have access to inpainting on Midjourney. The new feature lets you edit any area of your Midjourney generations with some simple canvas editing tools. Javi Lopez has a quick and thorough video on X about how to use it here.

Simon says: AI art isn't copyrightable

In a landmark decision on August 18th, a federal judge ruled that a piece of art created by AI is not eligible for copyright protection. Intellectual property law has long said that copyrights are "only granted to works created by humans," and that doesn't look like it's changing anytime soon. The ruling turned down Stephen Thaler's bid challenging the government's position, and the opinion stressed that "Human authorship is a bedrock requirement." Interestingly enough, the ruling did reference photography: while cameras generate a mechanical reproduction of a scene, the judge explained, they only do so after a human develops a "mental conception" of the photo, a product of decisions like where the subject stands, arrangement and lighting, among other human choices.

I'm reminded of Stephan Ango's essay "A camera for ideas" from a year ago, which likens generative AI to "a revolutionary new kind of camera": instead of turning light into pictures, it turns ideas into pictures. The only difference is that a camera is bound by the limits of reality, while derivative work by generative AI is not.

Should AI art be copyrightable? Let us know what you think!

--

That just about wraps up this fourth issue of Dimensional Dispatch! Before I go, I wanted to share a few creators who have written about their experience with our Image to Hologram Conversion service:

- Heather Cooper of Visually AI — Beyond Pixels: The 3D Revolution and the Magic of Holograms

- Jim the AI Whisperer — How to make 3D images with AI art that will grab readers’ attention

- Botanical Biohacker — Is My Cat Killing Me?

And last but not least, join us on our Discord and enter this week's community challenge: Alien Planet. The rules are simple: show us what life could be like on another planet. But as a hologram.

We can't wait to see you and your holograms on the internet soon. Try out Blocks for yourself and convert your first image here.

-Nikki