Creator Feature: Exploring New Dimensions with Michael Rubloff and Radiance Fields

Michael Rubloff is pushing the boundaries of what’s possible with 3D imaging, bringing us closer to a world where 2D photography is just a memory. As the founder of Radiancefields.com and an innovator in Radiance Field technology, Michael is cataloging the evolution of 3D mediums, such as Neural Radiance Fields (NeRFs) and Gaussian Splatting, with the goal of re-imagining how we see and capture the world. In this creator feature, Michael shares his journey, insights into his creative process, and how Looking Glass Displays have transformed the way he showcases his work.

P.S. If you want to learn more about real-world capture and creating holograms from the world around you for Looking Glass displays, check out our recent article, Capturing Real World Holograms for Looking Glass Displays.

Q: Introduce yourself! Who are you, what’s your background and what inspires you?

Hi! Thank you so much for having me. My name is Michael Rubloff, and I am the founder and managing editor of Radiancefields.com. My background is in general entrepreneurship, and I get really inspired by re-examining how work is done. I was the first employee of the company Mate Fertility, which I helped start in 2020. Starting in 2022, I discovered Radiance Field-based technology, and it was so compelling that I began spending more and more time on it until I felt like I couldn’t imagine doing anything else!

Q: Briefly describe your work and the primary themes you explore through your work.

Radiance Field methods take a series of standard 2D images as input and are able to create hyperreal 3D representations! Right now the main types of Radiance Fields are Neural Radiance Fields (NeRFs), Gaussian Splatting (3DGS), and Gaussian Ray-Tracing (3DGRT), but I think it’s likely that more representations will continue to emerge.

My work is to catalog the progression of these technologies in an attempt to re-imagine the dominant imaging medium of 2D. I believe that it is not only inevitable to move all imaging into 3D, but that the foundations are already in place to do so. Now, my goal is to try to help close the delta between the present and when photography is no longer the dominant imaging medium on Earth.

Here's me on a Bench:

Q: How did you get into 3D and what would you say motivated you to start creating in this field?

I started experimenting with 3D through LiDAR. During the pandemic, I was stuck inside like everyone else, when I discovered the scanner on my iPhone 12. I always loved photography, but I found myself questioning if 2D was the final frontier of imaging. I began wondering what it would take to make hyperrealistic 3D “photos” of my life.

I started experimenting right away under the pseudonym of the “Lidartist” while building the healthcare business, but objectively my results were terrible! That said, I was relentless about making my friends and family pose while I captured them, in an attempt to get a clean capture. I did this for about two years until one day I stumbled upon an early NeRF from NVIDIA’s Instant NGP. I remember this quite clearly, as I had a meeting about LiDAR that afternoon and, when I showed up, I was no longer speaking about LiDAR.

I didn’t own a PC at the time, but I felt so strongly that I needed to understand NeRFs that I convinced my friend to lend me his computer for a week. Unfortunately for him, this turned into a 10-month loan. It took me a solid six hours of staring at the command line just to install Instant NGP, but I felt the upside was too great to give up, and I’m so glad I didn’t.

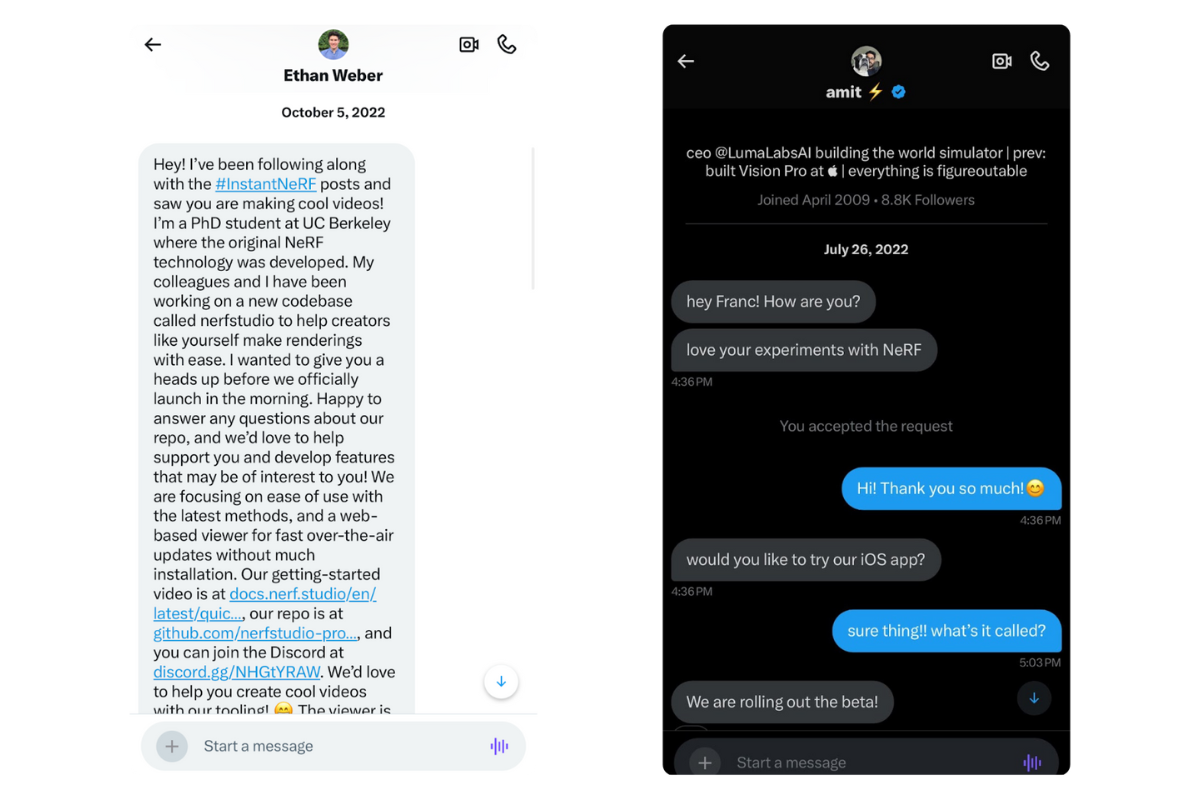

After about six months and about 500 experiments, I got a random DM from someone named Ethan Weber, to let me know about a new platform he, Matt Tancik, and Evonne Ng had created called Nerfstudio. I was so excited to try a new platform and it began to open my eyes to additional radiance field methods outside of Instant NGP.

However, I was not very familiar with the command line interface. That’s why I was even more excited when I got a DM from Amit Jain about a company that was in pre-alpha that he and Alex Yu were creating, named Luma AI (I have a pseudonym under the name Franc Lucent). Luma has done so much for the radiance field community to lower the barrier to entry. While they’re now squarely focused on building the Dream Machine, I am deeply appreciative of the Luma team!

A moment from Amsterdam on Luma:

What motivates me is that it is not only possible to evolve photography, but that it’s quite easy to capture and do reconstructions. For the first year of experimentation, I kept waiting for someone to pop up and voice the same opinion, but no one ever did. I grew frustrated seeing the incredible things people were creating, along with the amazing progress in research, go uncovered, so I decided to begin reaching out to professional journalists. Every single one of them ghosted me, so I decided to just do it myself. This led me to start the website neuralradiancefields.io, which today is radiancefields.com.

At SIGGRAPH 2023, a small group of people and I were able to convince a venue in downtown LA to host an event for which I had curated a list of roughly 20 creators, including myself, Paul Trillo, GradeEterna, Simon Green, and more. We also hosted a panel to talk about NeRFs with me, Wren from Corridor Digital, Paul Trillo, Fernando (the CEO of Volinga AI), and James Perlman. The name of the event was Points for Clouds, Dreams Aroused. We ended up having 1,100 people attend, which was more than expected, and I was so happy that people got to see NeRFs on display. It’s been a goal of mine to have radiance fields shown in MoMA, but I haven’t been able to execute on that yet. This is something that would be a perfect fit with Looking Glass Spatial Displays!

At the actual SIGGRAPH conference, NVIDIA had asked me to join their panel with Thomas Mueller, the author behind Instant NGP and the person who started all of this for me. Getting to meet him has been the highlight of my career thus far. In addition, I was also able to have an audience with Jensen [Huang, CEO of NVIDIA] to discuss Radiance Fields. I’ve been fortunate enough to continue to work with the NVIDIA team, giving two talks, being a panel guest, and speaking on the NVIDIA AI Podcast at GTC this year.

I have been consulting for Shutterstock for the last year, pushing the adoption of Radiance Fields in the industry forward. If you’re interested in learning more, let’s talk! Continuing to see people discover and embrace Radiance Fields across a variety of industries has been extremely motivating for me.

Q: How did you first learn about Looking Glass?

Looking Glass first popped onto my radar when they ported a Luma capture onto the Portrait device. I had spent so much time on the capturing and training portion of Radiance Fields that I had neglected searching for hardware to view captures on.

I was a little nervous at first trying it out and seeing how captures would translate onto the screen, but Looking Glass exceeded my expectations. It’s gratifying to experience the work as intended, without requiring glasses or a headset.

Q: How has the Looking Glass display influenced or changed your creative process?

Naturally, as technology begins to depart from 2D, having proper hardware to distribute and interact with this medium is critical. One thing that really excites me about 3D as a medium is that composition is no longer a creative bottleneck. People have the freedom to explore the composition that is most meaningful and unique to them. I try to keep this in mind as I capture. How can I create scenes that can bring different meanings for people to discover?

Q: If you’ve made anything for Looking Glass, what has been your favorite project or piece to create with the Looking Glass, and why?

I have an entire folder of photos of my dog, which I have cycling on my desk. But I also load my captures from Luma AI onto both my Looking Glass Go and Portrait. Specifically, I have hundreds of captures of my friends and family. It’s important to me that they are captured in 3D, and I’m glad that these hyper-real 3D portraits will exist forever.

Pssst: You can see an entire playlist full of Frankie's fluffy cuteness here.

Q: If you’ve shown your work in a Looking Glass publicly, how do viewers typically respond to your work in holographic form? Is there a memorable reaction you could share?

Now that I have my Go, I carry it around often in my backpack and people tend to be quite surprised by it (the case is super helpful!). Typically people hold it up really close to their face to try and understand how exactly it works. For a lot of people it appears to be science fiction. With the Go, compared to the Portrait, I think people are shocked by how slim the screen is, given how well it displays a 3D image.

Q: For creators new to this medium, how would you describe your experience with the technical aspects of creating for the Looking Glass?

I know firsthand that for people coming into this space, it can be intimidating to work within a new medium or explore possibilities, but I promise it isn’t difficult! Like anything, capturing takes a bit of practice. Trial and error will be your best friend here, and when it clicks, it’s a great feeling!

Q: How has the Looking Glass community contributed to your experience as a creator?

The Looking Glass team has been super supportive of the community, hosting events and tangibly showcasing how 3D can be interacted with in the real world. I don’t have the deepest technical background, so I’ve needed to ask for help on occasion and there’s always someone willing to help. It’s also been super helpful to see what works well and get some inspiration for what to create or explore.

(Plus! Getting started with 3D has never been easier! If you're brand new and just starting out, Looking Glass Go is perfect for you! Bonus - All Go units now come with a free photo frame to make those holograms shine! If you have a bit of know-how with 3D and are looking to take things to the next level, Looking Glass 16'' Spatial Display is just what you're looking for!)

Q: What advice would you give someone who's intrigued by this medium but hasn't taken the leap to try it yet?

I would say just open your camera app in your home and try capturing something really basic! Don’t try to do a full room first. Try finding an individual object. Getting started is always the hardest part, but it’s the best way to learn. Shutter speed is by far the most important camera setting when you capture.

If you’re intrigued, but unsure, you can always DM me too and I will respond!

Q: What does a day in the life of Michael / Radiance Fields look like?

My typical day is pretty normal! Right now, I have three main buckets in which I spend my time. The first one is discovering, reading, writing, and understanding new research papers and content. I also check GitHub and arXiv.

My content schedule for the most part has about a week of lead time. However, given how quickly this technology advances, there are several times a month when something is released that must be covered immediately. Thankfully, people have begun sending me announcements in advance of their release. It’s always massively helpful for me to have a heads-up whenever possible for these announcements, and it is truly appreciated!

After I have written an article or posted on social media, I spend a majority of my time consulting for businesses that are looking to implement or understand Radiance Field technologies. If you’re curious to learn more, reach out and let’s work together!

I also spend quite a lot of time either DMing with or speaking on the phone with researchers and engineers about their work, what could be possible, and trying to learn more about pipelines for radiance field reconstructions.

On Thursdays, I co-host a live podcast called View Dependent with MrNeRF. Depending on the day, I set aside time to prepare, which can entail rereading papers, collecting media, and preparing questions for the interview. This is something I still need to get much better at.

My website is also built on Framer and so I spend a lot of time hunting through the Framer forums or examining other websites that I admire for their design choices. It’s really fun for me and I end up spending too much time on it, so I do this only after typical working hours.

Q: What are some other 3D tools (software/hardware) that are in your toolset? If you can, share a picture of your workspace with a Looking Glass display on it with some of your work.

A lot of people ask me what platforms I use for my reconstructions. I try to stay up to date with all of them, but most often I use Luma, Postshot, Nerfstudio, Scaniverse, Polycam, Kiri Engine, and SuperSplat. I’d really like to get a headset one day, but it’s not in my budget right now.

Q: Lastly, what’s your outlook on the future of this space – we welcome any crazy predictions :)

My predictions are a bit out there, but I think it’s possible that televisions will feel anachronistic within the next 15 years. I think radiance fields will train instantaneously, extending up to IMAX-level reconstructions with real-time rendering rates.

I also think that the scale of Radiance Fields will be massive, as in the size of countries. This has already been proven to be possible simply by using more GPUs. So far the largest Radiance Field that I have seen is 25 km, trained across 260,000 images.

I am also hopeful that we will see the shift into 3D and that 2D images will have their place in history, kind of like paintings! I think it would be hard to argue that this shift will not occur in our lifetime.

Whether this advancement remains within Radiance Fields or an adjacent tech emerges, I think we will get there in the next 20 years. Hopefully more and more engineers continue to work on this problem so that it occurs faster.

Radiance Fields can also be dynamic, such as four-dimensional videos. I am hopeful that in the next three years we will see the introduction of dynamic Radiance Fields in the form of avatars, similar to Meta’s Codec Avatars (they are powered by Radiance Fields) or Apple’s Personas. Bring on hyper-real 4D content!

Huge thank you to Michael for taking the time to share his incredible insights. We hope you're enjoying our creator features!

Are you a creator working with Looking Glass and want to share your work with us? Write to us at future@lookingglassfactory.com or send us a DM on our Instagram.

We want to shine a light on all the amazing ways creators are using holography to break new ground in the world of digital art!

To the future!

One more Frankie Hologram for good measure 🐶