The Memory Machines

2020 will be remembered as the year that volumetric and light field recording technologies became deeply integrated with holographic displays. This combination will redefine how we relive our memories and connect with one another for decades to come.

About a year and a half ago, I hurriedly wrote this note to a few members of the Looking Glass team:

“I’d like to make that volumetric or light field recording rig we’ve been talking about for a while. I want this for a personal reason and it is time sensitive. My brother Ryan is dying of pancreatic cancer and I want him to be able to leave a message to his newborn daughter that feels more real than anything anyone has ever recorded before.”

Unfortunately, it turns out that pancreatic cancer is one of the few forces faster than technological progress, and we didn't manage to pull this off before my brother passed away*.

But a lot can change in a year and a half. Thanks to the breakneck pace of development in both capture and playback technologies over that time, 2020 will be the first year that volumetric and light field recording technologies deeply integrate with holographic displays like the Looking Glass. This combination of capture and display will lay the foundation for the next century of how we relive our most cherished memories and, soon, how we most deeply communicate with one another around the world.

This post is a brief guide to the capabilities and limitations of each of these real-world capture technologies, how they pair with holographic displays like the Looking Glass, and how both businesses and individuals can apply these technologies today.

There are four main approaches to high-fidelity three-dimensional capture of the real world that are suitable for playback in light field (aka holographic) displays, each with its own strengths and weaknesses. The four approaches I'll cover here are large-scale volumetric video capture studios, portable/modular depth camera capture, light field capture, and depth capture from phones.

1. Large-scale Volumetric Capture Studios

There are a few dozen volumetric video capture studios operating around the world. They work by pointing dozens to hundreds of cameras at a group of people on vast stages. The video feeds from those cameras are then sent to server banks, where the imagery is stitched and transformed into three-dimensional meshes (i.e., 3D models) of the captured scenes.

A significant benefit of this type of capture is that the resulting volumetric video is cross-platform: it is playable in AR or VR headsets as well as in holographic displays via Unity or Unreal plugins. This also means the content can be modified with visual effects and added to synthetic environments with almost the same ease that a CG artist would find working with virtual characters.

There are, surprise, downsides to this approach, however. Capturing and processing the data at these large studios is costly in both time and money, running from thousands to tens of thousands of dollars per minute of processed volumetric video depending on the quality and scale. Also, volumetric video capture as a class handles fine details like hair and optical effects like specularity and refraction poorly, if at all. This can make people captured this way feel a bit more like video game characters than real people.

That being said, volumetric video from these studios is one of the most prevalent types of “holographic” content in the world right now and, due to its ability to run cross-platform, continues to expand its reach.

Large-scale Volumetric Capture Studios: Intel Studios, 4D Views, Mantis Vision, Volograms, Microsoft Mixed Reality Capture Studios. Note that some of these companies also offer smaller-scale portable and modular studios. Comprehensive list here.

How to get volumetric video from large-scale studios into the Looking Glass: The volumetric video pictured above was ported into the Looking Glass with the HoloPlay Unity SDK.

2. Portable/Modular Depth Camera Capture

Just as 2D film started in large studios and then moved into the hands of vastly more artists as video cameras became portable and more affordable, volumetric video capture is now following the same path.

Relatively low-cost and capable depth cameras like the new Azure Kinect are being combined with powerful software toolsets like Depthkit to open up volumetric filmmaking to a wide range of developers and artists, with a resulting quality that approaches and sometimes exceeds that of the large volumetric video studios.

This nimble and modular approach to volumetric video capture can be scaled up or down for productions of different size or duration and is significantly more flexible than booking a large-scale studio. Because of these advantages, we use this approach often in our own Looking Glass labs.

That being said, the more nimble volumetric video capture approach is still subject to the same fundamental fidelity limitations as discussed with the large volumetric video capture studios: volumetric video as a class can’t capture details like hair and misses out on key optical effects like specularity. This limitation is balanced by the relative affordability of the cameras and software toolsets, their portability, and the flexibility from a visual effects standpoint of the resulting captures.

How to get depth camera capture into the Looking Glass: There are two paths.

- Depthkit + Unity: The primary workflow for getting volumetric video recorded with depth cameras like the Azure Kinect into holographic displays like the Looking Glass up to this point has been to use Depthkit with Unity. This path still offers the deepest ability to edit and refine the volumetric video content.

- Depth Media Player + Depthkit: That being said, most filmmakers don’t use Unity. With that in mind and to increase access to the power of volumetric video in holographic displays, in January 2020 Looking Glass is releasing a new tool called Depth Media Player. When recordings are made with Depthkit, those volumetric videos can be played back in Depth Media Player in any Looking Glass directly for the very first time, just as regular 2D video would be, without using a game engine like Unity.

3. Light Field Capture

The highest fidelity way to capture the real world in practice today is light field capture, full-stop. It’s also the least well-known and most difficult to find off-the-shelf tools for.

That's because light field displays, more colloquially referred to as holographic displays, only hit the market at scale about a year ago. Only with their arrival did this new workflow of light field capture direct to light field display emerge. I believe this is a critical step toward achieving the perfect reproduction of life in holographic form.

This matters because the light-field-in, light-field-out approach is fundamentally different from volumetric video capture. In light field capture, the intensity, color, and direction of the rays of light from a real-world scene are captured and then replayed, completely bypassing the intermediate step of conversion to a 3D mesh required for volumetric video. This results in a level of fidelity that simply isn't achievable with volumetric video capture.
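To make the "no mesh" point concrete, here is a minimal sketch, assuming a light field stored as a grid of images from a U×V array of cameras (one common way to sample the rays described above, not necessarily how Looking Glass or Visby store their captures). A viewpoint between cameras is approximated by blending the nearest captured views, so the output comes straight from recorded light rather than from a reconstructed 3D model. The function name `render_view` and the array layout are hypothetical.

```python
import numpy as np


def render_view(light_field: np.ndarray, u: float, v: float) -> np.ndarray:
    """Approximate the image seen from fractional camera-grid position (u, v).

    Assumed layout (hypothetical): light_field has shape (U, V, H, W, C),
    i.e. one H x W image with C color channels per camera on a U x V grid.
    The four nearest captured views are blended bilinearly; no 3D mesh is built.
    """
    num_u, num_v = light_field.shape[:2]
    if not (0 <= u <= num_u - 1 and 0 <= v <= num_v - 1):
        raise ValueError("(u, v) must lie inside the camera grid")

    # Indices of the neighboring cameras (clamped at the grid edge).
    u0, v0 = int(np.floor(u)), int(np.floor(v))
    u1, v1 = min(u0 + 1, num_u - 1), min(v0 + 1, num_v - 1)
    du, dv = u - u0, v - v0

    # Weighted sum of the four surrounding views.
    return ((1 - du) * (1 - dv) * light_field[u0, v0]
            + du * (1 - dv) * light_field[u1, v0]
            + (1 - du) * dv * light_field[u0, v1]
            + du * dv * light_field[u1, v1])
```

Naive blending like this produces ghosting when cameras are spaced far apart, which is one reason practical light field rigs use dense camera arrays or depth-aware resampling.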

Light field captures can be static, as in the example above, or recorded and played back at 30fps+ as light field video. Looking Glass co-founder and CTO Alex Hornstein has been experimenting extensively in this area over the past couple of years, most recently on expeditions with National Geographic and other explorers looking to capture nearly inaccessible places and endangered species around the globe. The goal is to bring these places and species to a wider audience with unparalleled realism.

As mentioned above, light field capture isn't nearly as widespread as volumetric video capture yet, but that's rapidly changing. Light field capture rigs have started to spring up at conferences around the world, startups are building new systems every day, and many of the big tech companies are getting into the game as well: Google put together a major initiative exploring light field capture tech last year.

Who’s experimenting with light field capture and playback: Visby, Google, Looking Glass test rigs

How to get light field captures into a Looking Glass: Use the Looking Glass Lightfield Photo App. A light field video player is in beta.

4. Depth from Phones

If you’ve read the above and are feeling left out of the paradigm shift from flat media to a holographic future, this last section is intended to deal you back in. Because believe it or not, you probably have a depth camera with you right now. Millions of people do.

Many modern phones, like the iPhone 7 Plus, 8 Plus, X, and 11 and a number of Android phones like the Pixel 4, have depth cameras built in. These are primarily marketed for their ability to take Portrait Mode photos with depth-of-field effects, but they also give these phones the latent ability to capture real-world depth suitable for holographic displays.

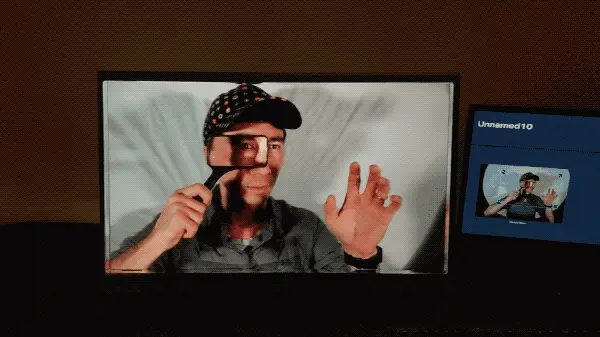

Holographic family photo of me, my kids, and my mom taken with an iPhone X and beamed straight into an 8.9" Looking Glass. Depth image of the same photo on the right.

Depth capture with phones is instantaneous, convenient, and pretty damn fun. It's not going to get you the same level of quality as the other three approaches described above, and because it is fundamentally a single-camera approach, it will leave gaps in the resulting holographic reproduction. But it's surprisingly good, and it points to a future not far away in which all of our memories are captured and played back with real depth.
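The gaps follow from the geometry. A phone's depth map stores one distance per pixel, and back-projecting it through a pinhole camera model yields a single surface seen from one viewpoint; anything hidden behind that surface was never recorded. The minimal sketch below assumes a depth map in meters and pinhole intrinsics (`fx`, `fy`, `cx`, `cy`) obtained from the phone's camera calibration. The function name `depth_to_points` is hypothetical, and this is an illustration of the general idea, not the Moments 3D pipeline.

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into camera-space 3D points (pinhole model).

    depth: H x W array of distances along the optical axis (assumed meters);
    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Returns an N x 3 array of (x, y, z) points, one per pixel with valid depth.
    """
    depth = np.asarray(depth, dtype=float)
    h, w = depth.shape
    rows, cols = np.mgrid[0:h, 0:w]  # pixel row (v) and column (u) indices

    z = depth
    x = (cols - cx) * z / fx
    y = (rows - cy) * z / fy

    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    # Pixels with zero depth carry no measurement, so drop them.
    return points[points[:, 2] > 0]
```

Every point lands on the one surface facing the camera, so when a holographic display shows the scene from a slightly different angle, the regions behind foreground objects have no data to fill them.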

How to get phone depth captures into the Looking Glass: The new iOS capture app Moments 3D for the Looking Glass is coming to a download link near you in January 2020. In the meantime, there's a tutorial for capturing good depth images here. Docs are coming soon.

So there you have it. The real world is coming to a holographic display near you. Not 100 years from now, not 10 years from now. Next year, 2020, is the year the long-awaited sci-fi dream of recording and playing back holographic memories becomes real. To the future!

Note: I'll be updating this post over the coming few weeks as we add new download links for the toolsets mentioned above, so check back often.

— — — —

Inspired by movies of the '80s and '90s, the author, Shawn Frayne, has been reaching toward the dream of the hologram for over 20 years. Shawn got his start with a classic laser interference pattern holographic studio he built in high school, followed by training in advanced holographic film techniques under holography pioneer Steve Benton at MIT. Shawn currently works between Brooklyn and Hong Kong, where he serves as co-founder and CEO of Looking Glass Factory.

*I'm dedicating this post about the memories of the future to my brother Ryan: inventor extraordinaire and longtime confidant with whom I could always share the craziest ideas late into the night. Miss you, brother.