Powering Holographic Media with the Web

Introducing Looking Glass Media Encoder, a powerful new web tool designed to streamline the process of encoding and displaying holographic video and images for Looking Glass Go and Portrait.

For creators and professionals using Looking Glass displays, having a seamless way to load and play back 3D media is essential. Here's the Looking Glass Media Encoder in action.

👋 Hi I’m Bryan. I’m the Developer Experience Lead at Looking Glass. We’re sharing an early look at Looking Glass Media Encoder and are excited to hear your feedback!

Video has become a pretty ubiquitous part of our lives. Whether it comes from the old family video recorder or the latest smartphone, video lets us retain some of our most cherished memories. This blog post will take you behind the scenes of going from a video file to a Looking Glass display, and show how we’re using the web to power it all.

This blog post assumes that you understand basic programming logic and computer terminology. If you’re still new to development, we’d recommend checking out how Looking Glass displays work or browsing through our documentation pages.

Holographic Media

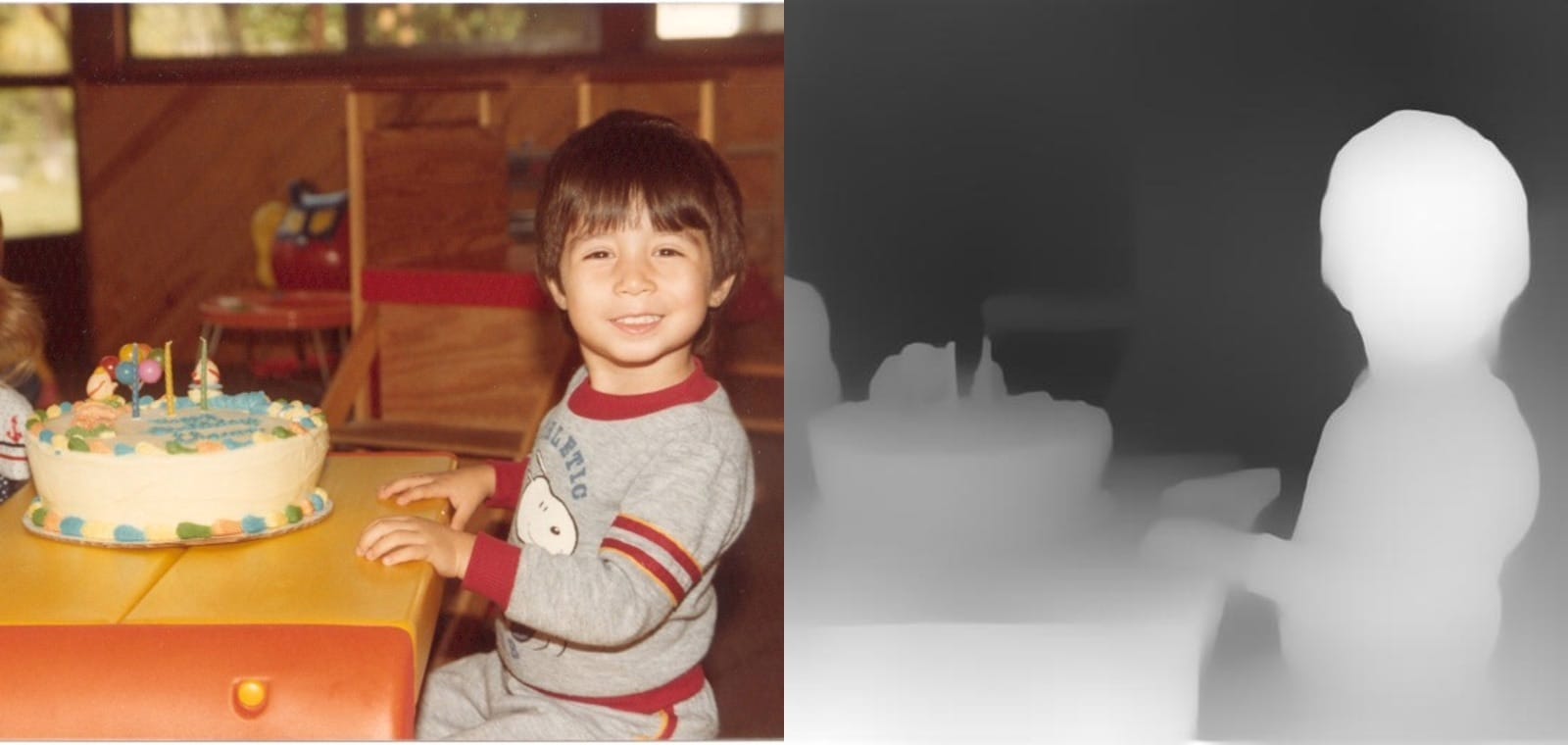

Currently there are two main kinds of video formats that we use to encode 3D data: Quilts and RGB-D. Quilts are similar to sprite sheets or flip books: each frame contains 45 (or more) views that represent the different perspectives you see when you look at a Looking Glass display. RGB-D is a different type of representation, where the video is split in half, with one side being color and the other being a depth map, which represents the color data’s 3D position along a single axis.
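To make the sprite-sheet comparison concrete, here is a minimal sketch of how one view could be located inside a quilt frame. The helper name `quiltViewRect`, the 5 x 9 grid in the usage line, and the assumption that view 0 sits in the bottom-left tile are illustrative choices, not details taken from this post.

```typescript
// Hypothetical helper: find the pixel rectangle of one view in a quilt grid.
interface TileRect {
  x: number; // left edge in pixels
  y: number; // top edge in pixels (origin at the top-left of the frame)
  width: number;
  height: number;
}

function quiltViewRect(
  viewIndex: number,
  columns: number,
  rows: number,
  quiltWidth: number,
  quiltHeight: number,
): TileRect {
  if (!Number.isInteger(viewIndex) || viewIndex < 0 || viewIndex >= columns * rows) {
    throw new RangeError(`view ${viewIndex} is outside a ${columns}x${rows} quilt`);
  }
  const width = quiltWidth / columns;
  const height = quiltHeight / rows;
  const column = viewIndex % columns;
  const rowFromBottom = Math.floor(viewIndex / columns);
  // Assumption: views are ordered left to right, starting from the bottom row,
  // so the row is flipped to get a top-left pixel origin.
  const rowFromTop = rows - 1 - rowFromBottom;
  return { x: column * width, y: rowFromTop * height, width, height };
}

// A 45-view quilt arranged as 5 columns by 9 rows (assumed layout).
console.log(quiltViewRect(0, 5, 9, 4000, 4500));
```

In a real renderer this lookup would happen per pixel in a shader rather than on the CPU; the arithmetic is the same.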

Both of these methods require running through a graphics pipeline before ending up on a Looking Glass display. This ensures that each pixel gets accurately mapped to the optical element on the display.

Example of a quilt by Jane Guan (left) and an example of an RGB-D image of Looking Glass CEO and Co-founder, Shawn Frayne (right)

Video itself can be rather complex, especially once you start getting to higher or extreme resolutions like the kind we need here at Looking Glass. Quilt videos typically start at 4096 x 4096 and go up to 8192 x 8192. To put that in perspective, that’s about 2 to 8 times as many pixels as a typical 4K (3840 x 2160) video. RGB-D video is always double the width of the input video, so a 1920 x 1080 video becomes 3840 x 1080.
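The resolution comparison above is simple arithmetic, and a short sketch shows where the "2 to 8 times" figure comes from. The type and function names here are made up for illustration.

```typescript
interface Size {
  width: number;
  height: number;
}

const pixelCount = (size: Size): number => size.width * size.height;

// How many times more pixels `a` has than `b`.
const pixelRatio = (a: Size, b: Size): number => pixelCount(a) / pixelCount(b);

// RGB-D output places color and depth side by side, doubling the width.
const rgbdSize = (input: Size): Size => ({ width: input.width * 2, height: input.height });

const uhd: Size = { width: 3840, height: 2160 };
const smallQuilt: Size = { width: 4096, height: 4096 };
const largeQuilt: Size = { width: 8192, height: 8192 };

console.log(pixelRatio(smallQuilt, uhd).toFixed(2)); // "2.02"
console.log(pixelRatio(largeQuilt, uhd).toFixed(2)); // "8.09"
console.log(rgbdSize({ width: 1920, height: 1080 })); // { width: 3840, height: 1080 }
```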

Creating an efficient video-based graphics process is quite complex, requiring careful resource management. Given the high resolution of these videos, inefficient handling of video frames can lead to severe performance issues or, worse, make the user's whole computer lag.

Before we jump into all the technical details there are a few terms we’ll want to go over, because, after all, video can get quite deep and quickly becomes pretty complex!

To peel back some layers, video typically has a few key factors:

- codec - the algorithm used to compress and optimize the video for playback; common codecs include H265, H264, VP9, VP8, and AV1

- colorspace - how color is represented in each pixel (for example RGB or YUV), which also affects the amount of data saved into each pixel

- resolution - the size of the video in width/height

- videoFrame - an individual frame of video

- audioChunk - a collection of audio data that we can decode

- syncing - our term for taking an input video (quilt or RGB-D) and outputting an encoded video for a Looking Glass display.

Hardware, computers, and embedded systems

One important thing to note is that not all computers can play back every kind of video. Apple’s latest chips lack hardware acceleration support for VP8/VP9, and codecs like H264 don’t support the higher resolutions that we need. In these cases, computers will usually fall back to software decoding, using the CPU rather than the GPU, but this can cause a handful of issues, including the dreaded memory copy.

Embedded Systems

This gets even more complex when it comes to the embedded processors that run in the Looking Glass Go and Looking Glass Portrait (no longer available). Modern embedded processors typically play back video at a maximum size of 3840 x 2160, and they often lack full colorspace playback support and have limited codec compatibility.

Because the processors embedded in the Go and Portrait are equipped with specialized media engines, we need to encode the video to H265 directly in order to play back 4K footage.

In addition to technical constraints, there are also some less commonly known aspects of video. Most display technologies today — whether they are LCD or OLED — are made of red, green, and blue pixels (RGB). This wasn’t always the case though, and, for a variety of reasons (including broadcast television standards), video uses YUV instead, which is a luminance-based standard for efficiently encoding images.

While modern computers typically have GPUs with specialized video decode and encode portions that allow us to efficiently play back and encode video, embedded systems often lack this functionality. Instead, embedded systems usually include a separate specialized display engine which allows for transforming YUV data directly to the display itself. This is especially crucial as embedded processors tend to lack resources to perform colorspace conversion (YUV → RGB) on top of the work they’re already doing to decode the video frames.
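For readers curious what the YUV → RGB conversion involves per pixel, here is a minimal sketch. It assumes 8-bit, full-range values and the BT.709 luma weights; real video often uses limited range or other matrices, and the function name `yuvToRgb` is hypothetical.

```typescript
interface Rgb {
  r: number;
  g: number;
  b: number;
}

// BT.709 luma weights (assumed). BT.601 would use KR = 0.299 and KB = 0.114.
const KR = 0.2126;
const KB = 0.0722;
const KG = 1 - KR - KB;

// Round and clamp a channel to the 8-bit range.
const clamp8 = (value: number): number => Math.min(255, Math.max(0, Math.round(value)));

function yuvToRgb(y: number, u: number, v: number): Rgb {
  // Chroma is stored with an offset of 128 so that "no color" is mid-range.
  const cb = u - 128;
  const cr = v - 128;
  const r = y + 2 * (1 - KR) * cr;
  const b = y + 2 * (1 - KB) * cb;
  // Green follows from the luma definition: Y = KR*R + KG*G + KB*B.
  const g = (y - KR * r - KB * b) / KG;
  return { r: clamp8(r), g: clamp8(g), b: clamp8(b) };
}

console.log(yuvToRgb(128, 128, 128)); // neutral chroma gives mid grey
```

Running this for every pixel of every 4K frame is exactly the kind of work a dedicated display engine takes off the processor.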

Video Encoding

In order for the Portrait and the Go to play back video in stand-alone mode, we need to encode the pre-rendered content directly to H265/HEVC, which their embedded processors can play back at sufficient rates.

In Looking Glass Studio, we used an open-source library called libkvazaar by ultravideo, which allowed us to encode H265 video on the CPU. This was necessary at the time because hardware-accelerated H265 playback wasn’t common across all the platforms we supported.

The downside of an approach like this is that it’s a software encoder, meaning it uses the CPU to encode frames. In order for us to encode a video in Looking Glass Studio we had to decode a frame, copy that data to the GPU, render it, then copy it back to the CPU for encoding. These copies typically add anywhere from 15ms to 33ms to a single frame. Over the course of even a short video, these delays add up to minutes of processing time.
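A quick back-of-the-envelope sketch shows how the per-frame copy cost accumulates. The clip length and frame rate below are assumed values chosen for illustration; only the 15 ms to 33 ms range comes from the paragraph above.

```typescript
// Total time spent on GPU <-> CPU copies over a whole clip, in seconds.
function copyOverheadSeconds(
  durationSeconds: number,
  framesPerSecond: number,
  copyMsPerFrame: number,
): number {
  const frameCount = durationSeconds * framesPerSecond;
  return (frameCount * copyMsPerFrame) / 1000;
}

// Hypothetical two-minute clip at 30 frames per second.
console.log(copyOverheadSeconds(120, 30, 15)); // 54
console.log(copyOverheadSeconds(120, 30, 33)); // 118.8
```

Under these assumptions, the copies alone add roughly one to two minutes, before any decoding, rendering, or encoding work is counted.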

Over the past few years, we’ve seen an increase in adoption of hardware acceleration for H265 encoding across multiple GPU vendors, including AMD, Nvidia, Intel, and Apple Silicon. Normally, accessing hardware acceleration on these platforms requires using vendor-specific libraries.

Where does the web fit in? 🌐

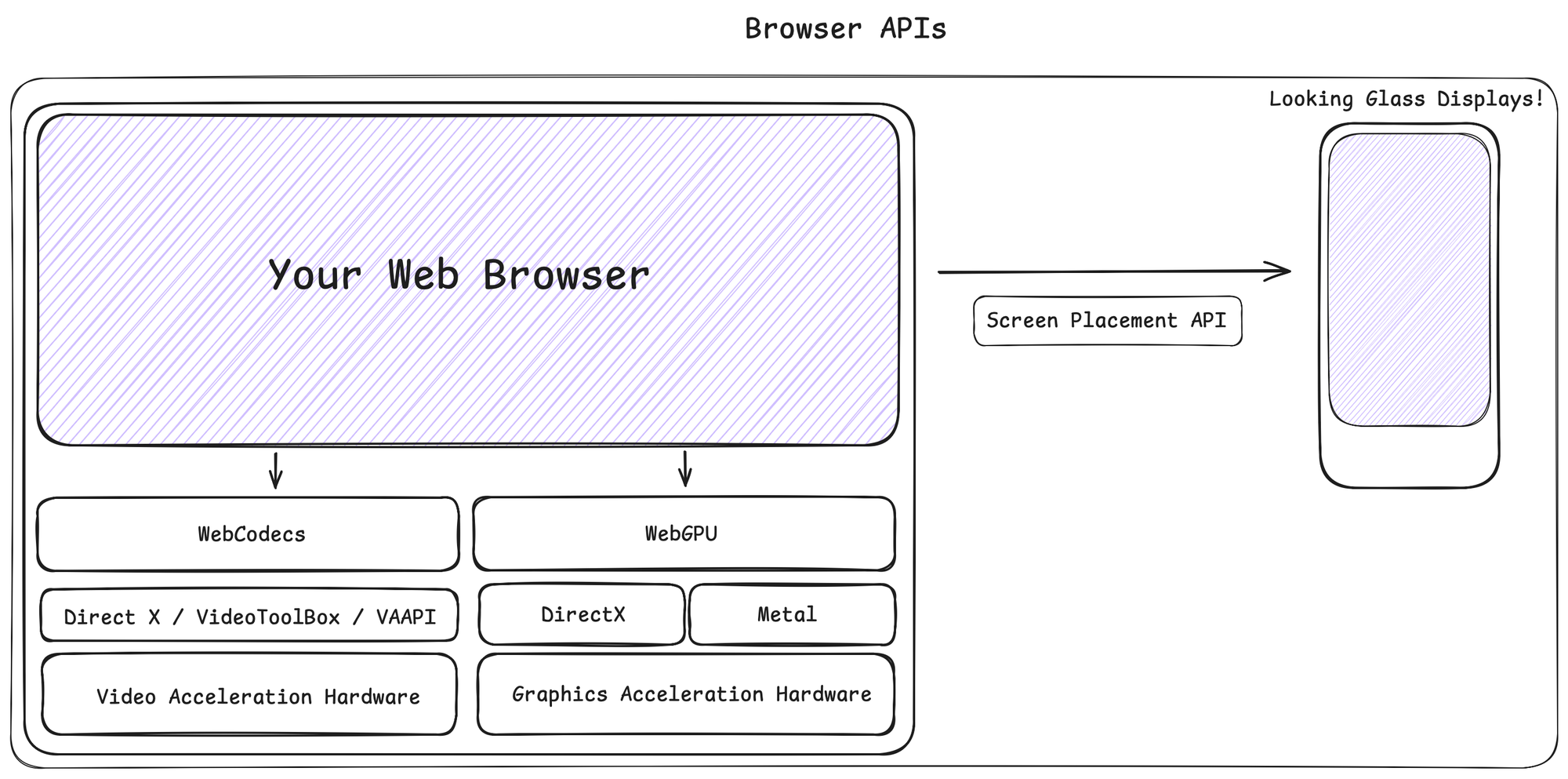

Now that we’ve covered the video hardware, let’s bring this back to the web. Web browsers have been increasing support for specialized hardware with APIs like WebGPU and WebCodecs. These APIs allow us to access the computer’s graphics and video acceleration hardware and have one application which can run across multiple operating systems, including macOS and Windows.

Web browsers allow us to target a single layer of abstraction that provides an interface to the computer’s hardware. In Chrome, FFmpeg is used along with the computer’s graphics driver to decode frames with the GPU and keep them accessible for use in WebGPU.

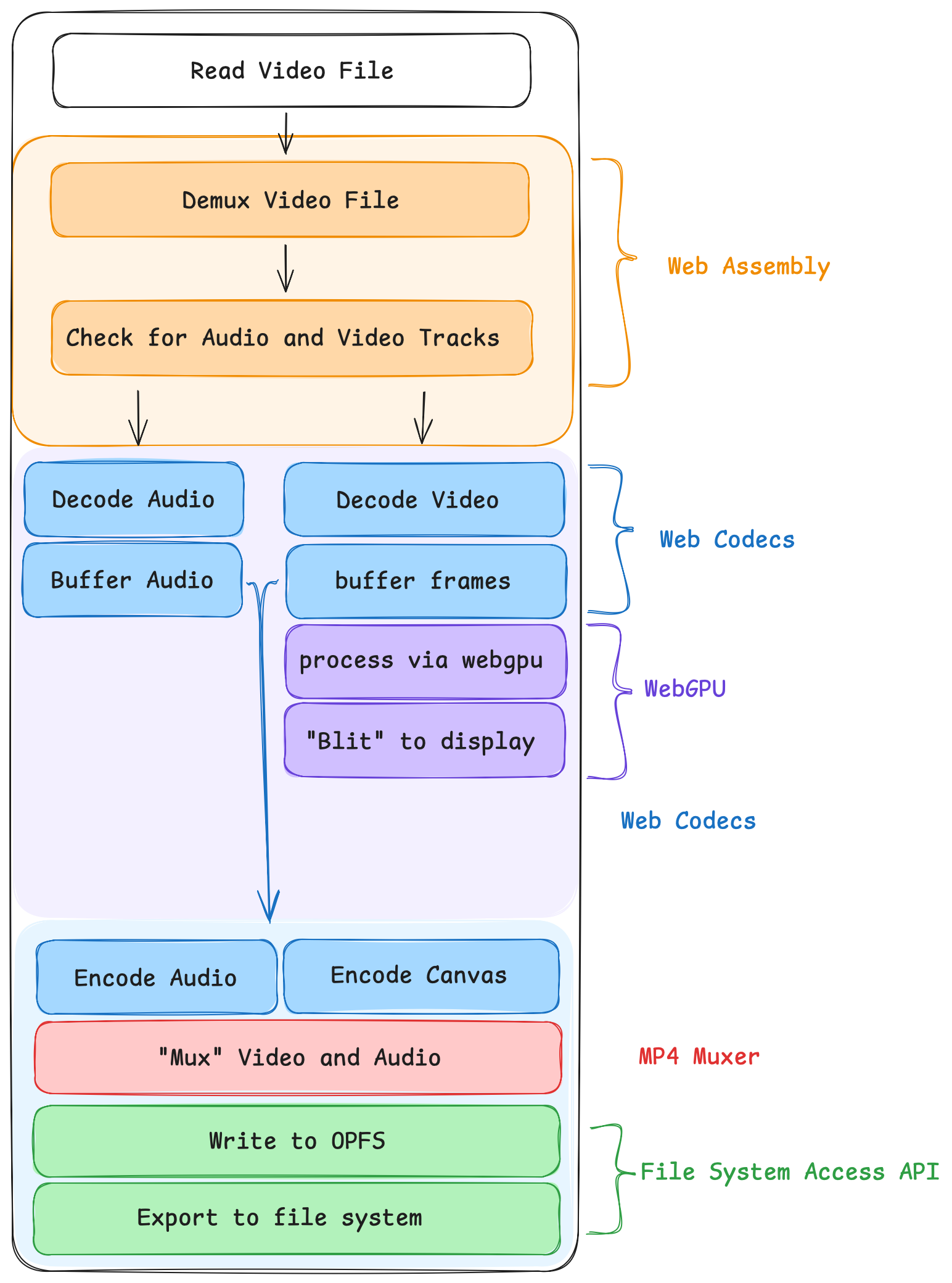

While the WebCodecs API allows us to decode a video directly, there’s still no browser API for reading video files or writing them. To do that, we need to use other libraries and, for the Portrait and Go, write a new video for playback in Standalone mode.

Muxing refers to the process of combining multiple input streams into a single output. Demuxing is the opposite, taking a single input stream and breaking it out into its component parts.
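As a toy illustration of these two terms, the sketch below interleaves a video track and an audio track by timestamp and then splits them apart again. It is a conceptual model only: the `Packet` shape and both functions are hypothetical, and this is not how web-demuxer or MP4Muxer work internally, since real containers also carry headers, codec configuration, and indexes.

```typescript
type Track = "video" | "audio";

interface Packet {
  track: Track;
  timestampUs: number; // presentation time in microseconds
}

// Mux: combine several tracks into one stream ordered by timestamp.
function mux(...tracks: Packet[][]): Packet[] {
  const all = ([] as Packet[]).concat(...tracks);
  // Array.prototype.sort is stable, so ties keep their input order.
  return all.sort((a, b) => a.timestampUs - b.timestampUs);
}

// Demux: split one stream back into its component tracks.
function demux(stream: Packet[]): Record<Track, Packet[]> {
  const out: Record<Track, Packet[]> = { video: [], audio: [] };
  for (const packet of stream) {
    out[packet.track].push(packet);
  }
  return out;
}

const video: Packet[] = [0, 33333, 66666].map((t) => ({ track: "video" as Track, timestampUs: t }));
const audio: Packet[] = [0, 21333, 42666, 64000].map((t) => ({ track: "audio" as Track, timestampUs: t }));

const stream = mux(video, audio);
console.log(stream.map((p) => `${p.track[0]}@${p.timestampUs}`).join(" "));
console.log(demux(stream).video.length, demux(stream).audio.length);
```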

To read videos, we utilize an open-source library built on FFmpeg called web-demuxer. To write videos, we utilize MP4Muxer. These two libraries allow us to read a variety of input videos such as .mp4 and .webm while also exporting videos specifically for the Go and Portrait.

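So the full pipeline for what we need is as follows: demux the input file, decode its frames with WebCodecs, render them with WebGPU, encode the result to H265, and mux it into a new video file.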

There are a lot of steps here that could have halted this experiment. A single copy operation anywhere in the chain would’ve taken our results from real-time to around 1 frame per second. We’re incredibly grateful for the W3C’s focus on zero copy on the Web for enabling this workflow.

Graphics

For our case we need to support two types of media formats — Quilts and RGB-D. For quilts, we need to take the input file and pass it directly through a shader to place all the views exactly down to the subpixel on the Looking Glass Display. For RGB-D, we need to run a full 3D pipeline to render a mesh, render each view, and then pull those resulting views into our displays. For both pipelines, having video frames that we can directly pass into WebGPU is crucial.

Playback

Playing back a video in the browser with WebCodecs gives us a few advantages. Because the videos we’re playing are so large, we can leverage Web Workers to move decoding work off the main thread, allowing the rest of the browser to remain performant.

One of the downsides to this amount of control is that we need to reimplement features such as synchronizing video with audio, looping a video, or transitioning through a playlist of videos. All of these cases need to be considered since at the root we’re working with individual videoFrames and audioChunks.

Using these improperly could lead to cases where we run out of memory, utilize too much of the CPU, or both! In order to ensure that audio and video are synced during playback, we need to use a ring buffer, a fixed-size data structure that allows a continuous stream of data to be written, read, and then overwritten again.
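The ring buffer idea can be sketched in a few lines. This is a simplified, single-threaded version written for illustration; the class and its method names are assumptions, and a player that shares audio between threads would typically build the same structure on a `SharedArrayBuffer` with atomic read and write indices.

```typescript
// Minimal fixed-capacity ring buffer for audio samples.
class RingBuffer {
  private readonly data: Float32Array;
  private readonly capacity: number;
  private readIndex = 0;
  private writeIndex = 0;
  private size = 0;

  constructor(capacity: number) {
    this.capacity = capacity;
    this.data = new Float32Array(capacity);
  }

  // Number of samples currently waiting to be read.
  get available(): number {
    return this.size;
  }

  // Writes as many samples as fit and returns how many were accepted.
  write(samples: Float32Array): number {
    const count = Math.min(samples.length, this.capacity - this.size);
    for (let i = 0; i < count; i++) {
      this.data[this.writeIndex] = samples[i];
      this.writeIndex = (this.writeIndex + 1) % this.capacity; // wrap around
    }
    this.size += count;
    return count;
  }

  // Fills `out` with the oldest samples and returns how many were read.
  read(out: Float32Array): number {
    const count = Math.min(out.length, this.size);
    for (let i = 0; i < count; i++) {
      out[i] = this.data[this.readIndex];
      this.readIndex = (this.readIndex + 1) % this.capacity; // wrap around
    }
    this.size -= count;
    return count;
  }
}
```

Because the storage is allocated once and reused, memory stays bounded no matter how long the video plays; a writer that gets back fewer samples than it offered knows to wait until the reader has caught up.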

While developing, we found a lot of edge cases, especially when driving the VideoDecoder to play back in sync with audio. The WebCodecs team’s audio-video player example was a good guide and, with a handful of modifications, we were able to get our player working with audio and video simultaneously!

Encoding

For our particular technology, the main benefit and strength of WebCodecs comes from its interop with WebGPU. This allows us to use videoFrames while keeping all the data on the GPU for the whole process, including decode, display, and encode. We can not only take the videoFrame into WebGPU, but we’re able to take a canvas and directly encode the data — this gives us an incredible speed improvement!
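One detail worth sketching is frame lifetime: every frame holds GPU memory until it is closed, so each step of the decode, render, encode loop has to release what it took. The sketch below uses small structural stand-ins instead of the real browser types so it can run anywhere; in a browser, `render` would typically bind the frame with `GPUDevice.importExternalTexture({ source: frame })`, `captureCanvas` would call `new VideoFrame(canvas, { timestamp })`, and `encoder` would be a `VideoEncoder`. The function name and parameter shapes are assumptions, not the Media Encoder's actual code.

```typescript
// Structural stand-ins for VideoFrame and VideoEncoder (assumed shapes).
interface FrameLike {
  readonly timestamp: number;
  close(): void;
}

interface EncoderLike<F extends FrameLike> {
  encode(frame: F): void;
}

function renderAndEncode<F extends FrameLike>(
  decoded: F,
  render: (frame: F) => void, // draw the decoded frame with the GPU
  captureCanvas: (timestamp: number) => F, // wrap the rendered canvas in a new frame
  encoder: EncoderLike<F>,
): void {
  let output: F | undefined;
  try {
    render(decoded);
    output = captureCanvas(decoded.timestamp);
    encoder.encode(output);
  } finally {
    // Release both frames even if a step throws, so memory is not leaked.
    decoded.close();
    output?.close();
  }
}
```

Closing the output frame right after `encode` mirrors the pattern in the WebCodecs samples, where the encoder keeps its own reference to the frame data it still needs.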

This results in massively sped up encoding times, allowing users like you to sync content much faster than in our previous solutions.

Additional APIs

In addition to WebCodecs and WebGPU, we’re also using a handful of other APIs to make this experiment possible. The Window Management API (formerly known as Multi-Screen Window Placement) allows us to open a window directly on a connected Looking Glass display (though it doesn’t support going directly to full screen yet), and the browser’s File System Access API (formerly the Native File System API) allows us to write the file to disk directly while we’re encoding it. This saves us a ton of computing resources, as we don’t have to hold the video in memory while we’re encoding.

Both WebCodecs and WebGPU are relatively new APIs, but we’re already seeing strong cross-browser support. Looking Glass Media Encoder is compatible with the latest version of Google Chrome on either a Windows or Mac computer. We’ve also tested an earlier version with Safari Tech Preview with WebGPU and WebCodecs enabled and are pleased to see similar results in performance.
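Since support still varies between browsers, a page can check for both APIs before starting. The sketch below is one hedged way to do that: it takes the global scope as a parameter so it can be exercised outside a browser, and the `BrowserScope` and `detectSupport` names are made up for this example. It only checks that the APIs are exposed, not that a particular codec or GPU adapter is available.

```typescript
// The parts of the browser global scope this check looks at.
interface BrowserScope {
  navigator?: { gpu?: unknown };
  VideoDecoder?: unknown;
  VideoEncoder?: unknown;
}

interface SupportReport {
  webgpu: boolean;
  webcodecs: boolean;
}

function detectSupport(scope: BrowserScope): SupportReport {
  return {
    // WebGPU is exposed as navigator.gpu.
    webgpu: scope.navigator?.gpu !== undefined,
    // WebCodecs exposes VideoDecoder and VideoEncoder constructors.
    webcodecs:
      typeof scope.VideoDecoder === "function" && typeof scope.VideoEncoder === "function",
  };
}

// In a page, this would be called with the global object (window or self).
console.log(detectSupport({}));
```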

Try Looking Glass Media Encoder

Looking Glass Media Encoder is now available in early access - ready for you to explore!

Get started 📖 View the user guide and documentation here.

Don't forget, you can join our community to share feedback, connect with other creators and developers, and get early access to our latest features.

Thank you for checking us out today, and as always,