Depth Maps: How Software Encodes 3D Space

Depth maps contain information about the distance of objects from a specific perspective or reference point (like a camera lens). Each pixel is assigned a value that represents how far the surface shown at that pixel is from the reference point, which gives software a 3D description of the scene to go with its RGB image or virtual render.

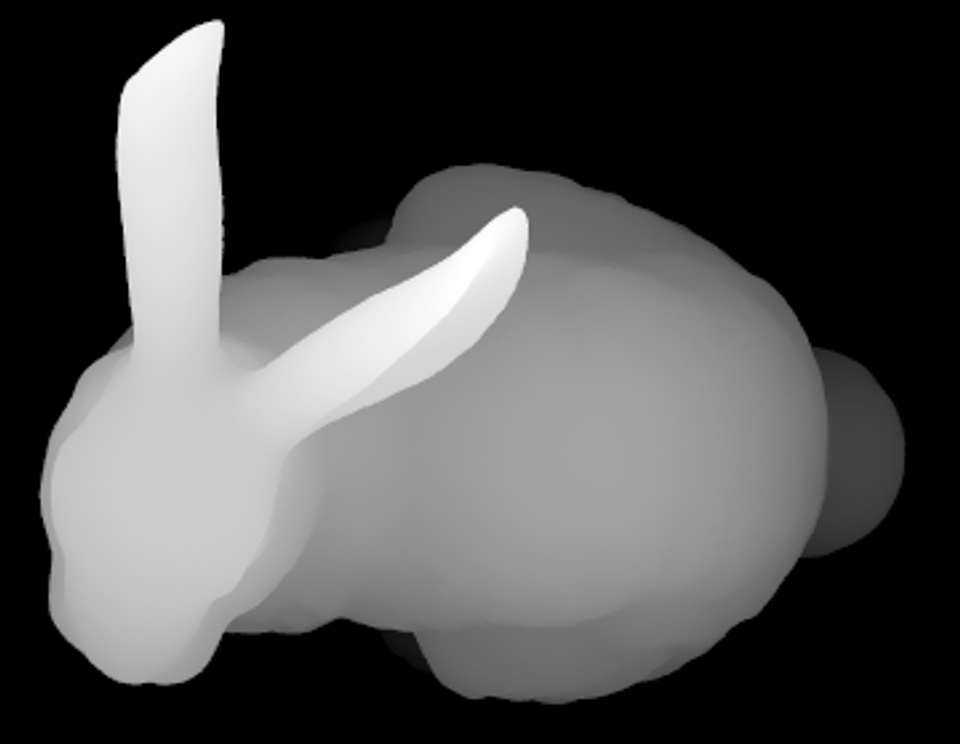

Take a look at the image of the Stanford Bunny above. The white pixels represent the part of the scene that is closest to the camera lens, and the black pixels represent the part that is furthest away. In this case, the closest part of the scene is the bunny’s ears. The grayscale gradient in between shows that the head, neck, and body are a bit further from the camera, the legs further still, and the tail the furthest part of the bunny, just ahead of the background, which is the furthest point in the image.

This is a depth map.
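To make that concrete, here is a minimal sketch of how a grid of distances could be turned into the white-is-near grayscale described above. The function name `depth_to_grayscale`, the tiny 3x3 grid, and the distances in meters are all made up for illustration; real depth maps come from a renderer or a sensor and are much larger.

```python
def depth_to_grayscale(depth, near_is_white=True):
    """Map a 2D grid of distances to 8-bit gray values (0-255)."""
    flat = [d for row in depth for d in row]
    d_min, d_max = min(flat), max(flat)
    span = d_max - d_min
    out = []
    for row in depth:
        out_row = []
        for d in row:
            # t is 0.0 at the nearest point and 1.0 at the farthest point
            t = (d - d_min) / span if span else 0.0
            gray = round(255 * (1.0 - t)) if near_is_white else round(255 * t)
            out_row.append(gray)
        out.append(out_row)
    return out


# Hypothetical distances in meters: a near object in the middle column,
# with the background 5 m away on either side.
depth_m = [
    [5.0, 1.0, 5.0],
    [5.0, 2.0, 5.0],
    [5.0, 3.0, 5.0],
]
gray = depth_to_grayscale(depth_m)
```

The nearest value in the grid ends up white (255) and the farthest ends up black (0), with everything else spread across the grays in between.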

Depth maps don’t have a set standard - that is to say, in some depth maps the closest point to the camera lens is black, and some use an RGB color scale instead of grayscale. So keep an open mind here.
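If you ever need to move between the two grayscale conventions, the conversion is just a flip of each value. This is a small sketch assuming an 8-bit grayscale depth map stored as nested lists; `invert_depth_map` is a name I made up for the example.

```python
def invert_depth_map(gray):
    """Flip an 8-bit depth map between near-is-white and near-is-black."""
    return [[255 - v for v in row] for row in gray]


# Hypothetical 2x2 depth map where white (255) means near
near_is_white = [[0, 255], [64, 191]]
near_is_black = invert_depth_map(near_is_white)
```

Applying the function twice gives you back the original map, so it works in both directions.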

If you’re like me, then a simple definition of what something is doesn’t cut it. I need to know why it’s needed and how it’s applied in order to digest a concept fully and integrate it into my understanding of the real and virtual world. So without further ado:

Why do we need depth maps?

There are a few reasons we rely on depth maps when we communicate objects in 3D space. For the sake of comprehension, I’ll focus on what I believe is the most important one: to tell software where objects are in Z space. Being able to see depth and understand how close and far objects are is the result of being a human being with stereoscopic vision.

When we look at a photo like this one below, we intuitively understand a number of things:

The path at the bottom is the closest to the lens, followed by my friends ahead of me, with the landscape of the Grand Canyon stretched out to the back of the frame.

We agree upon this because you and I live in the real world and are accustomed to viewing in depth with the same stereoscopic vision. In essence, however, and especially to a computer, this is just a bunch of differently colored pixels arranged along the X and Y axes in an image of a specific size on a screen.

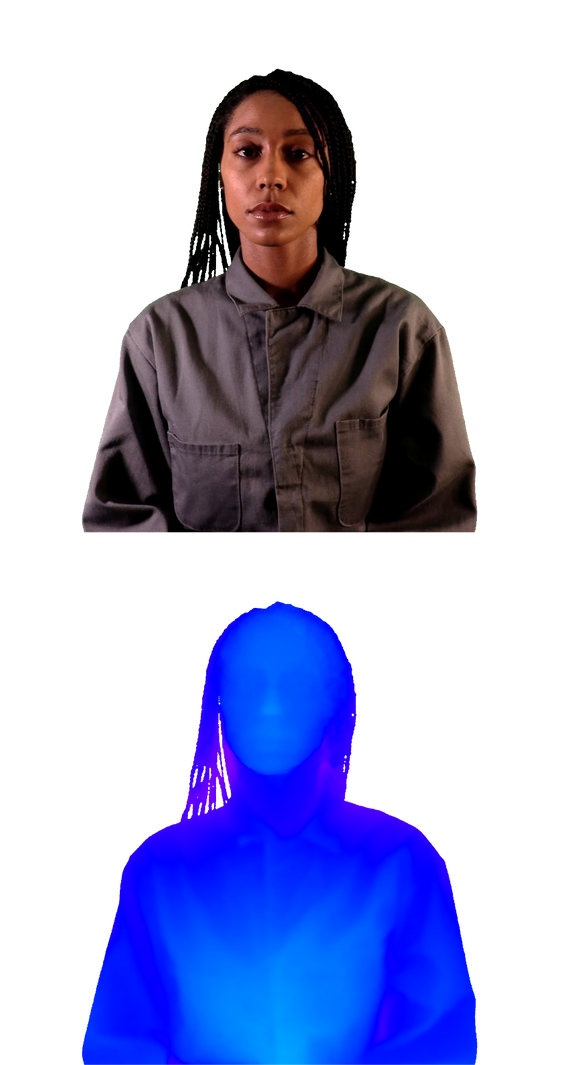

So for the computer to see the depth that we see, this is created:

This is the depth information for the same image above. The pixels closest to the camera are assigned a value that translates to white in the depth map, and the pixels furthest from the camera are assigned a value that translates to black, with a grayscale gradient depicting the distance from the objects and floor at the front of the scene to the back of the scene.

I guess the joke I like to tell is that this is probably how Agent Smith’s great great grandfather saw the matrix when it first booted up.

I’m hilarious.

Okay, but still, why do we need this?

We need the computer to understand this for a few applications.

Realism in Virtual Scenes

One is realistic lighting in 3D renderings. Remember Doom?

Great game. But I can also point to it as an example of a 3D game that didn’t have a lot of Z space data to work with in order to render realistic lighting.

You can see this gameplay still from Call of Duty: Modern Warfare as a good comparison of what we were working with in the 90s versus what we’re working with today.

The way light and shadow fall on objects also depends on their distance from the reference point, or the (virtual) camera lens. As objects get further away, they are obscured by more fog and shift in color. This translates to a more realistic-feeling render of a 3D game, because we live in a world of light and shadow (not flat colors, though that could be cool).
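The fog effect is an easy one to sketch. Below is a simple linear fog blend, where a pixel's color is mixed toward a fog color based on its depth. The function name, the gray fog color, and the `fog_start` and `fog_end` distances are assumptions for illustration, not how any particular game engine does it.

```python
def apply_fog(color, depth, fog_color=(128, 128, 128),
              fog_start=10.0, fog_end=100.0):
    """Blend an RGB color toward fog_color based on distance from the camera."""
    # t is 0.0 at or before fog_start and 1.0 at or beyond fog_end
    t = (depth - fog_start) / (fog_end - fog_start)
    t = max(0.0, min(1.0, t))
    return tuple(round(c * (1.0 - t) + f * t) for c, f in zip(color, fog_color))


red = (255, 0, 0)
near = apply_fog(red, depth=5.0)    # closer than fog_start: color unchanged
mid = apply_fog(red, depth=55.0)    # halfway through the fog range
far = apply_fog(red, depth=100.0)   # at fog_end: fully fog colored
```

The same red surface keeps its color up close, washes out in the middle distance, and disappears into the fog at the far end, and the only extra input needed is the depth value.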

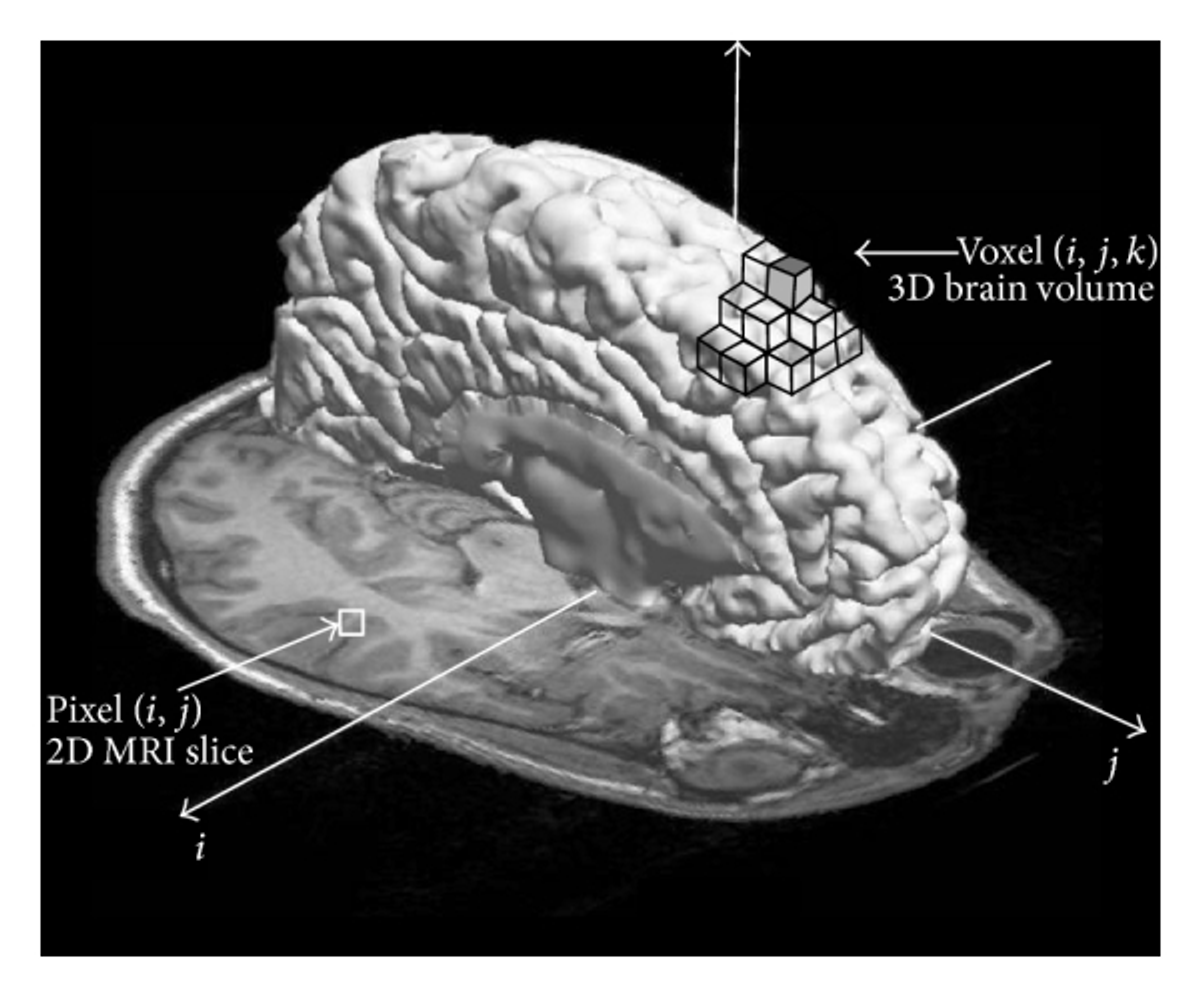

Medical Imaging

Depth maps are also used in medical imaging to create 3D models of the human body. A depth map is rendered by taking multiple images of the human body (like CT or MRI scans) and calculating the distance from each pixel to the camera through software. The model generated from this depth map can be used for diagnosis and planning surgery for the patient.

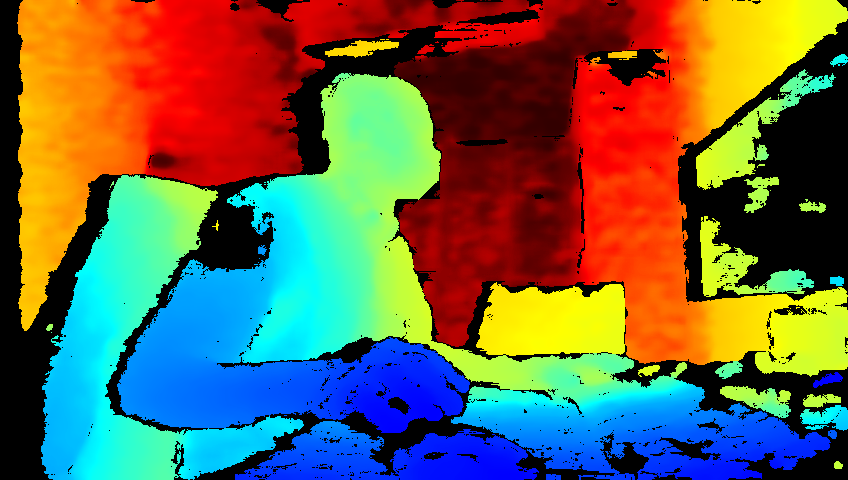

Car and Robot Vision

Did you ever think about how cars can drive themselves? How can they see the road? Turns out, it’s through depth imaging technology. They use stereo cameras, LiDAR depth sensors, or a combination of both to generate depth maps and detect other objects (like other cars, people, poles, etc.) and their distance from the car.
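For the stereo camera case, the usual textbook relationship is that depth equals focal length times baseline divided by disparity, where disparity is how far a point shifts between the left and right images. Here is a minimal sketch of that formula, assuming rectified cameras; the function name and the example numbers are hypothetical and not taken from any real vehicle.

```python
def disparity_to_depth(disparity_px, focal_length_px, baseline_m):
    """Estimate distance in meters from stereo disparity in pixels.

    Assumes two rectified cameras with the same focal length (in pixels)
    mounted baseline_m meters apart.
    """
    if disparity_px <= 0:
        # No measurable shift between the two views: treat as infinitely far
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 800 px focal length, cameras 0.5 m apart
close_object = disparity_to_depth(40.0, focal_length_px=800.0, baseline_m=0.5)
far_object = disparity_to_depth(4.0, focal_length_px=800.0, baseline_m=0.5)
```

A large shift between the two images means the object is close, and a small shift means it is far away. Run this for every pixel and you have a depth map.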

Robots can also see thanks to depth imaging (remember Grandpa Agent Smith?), using depth maps to generate 3D models of their environment and support the recognition of specific objects. If you’re interested in teaching your robot to see, check out this tutorial that uses the OAK-D Lite, an AI-powered depth camera!

How does this apply to me?

Smartphone depth maps

Well if you use a smartphone, chances are high that you’re interacting with depth maps already.

iPhones and Android phones with Portrait features use a depth map to isolate the subject from the background and change the depth of field or lighting on the subject.
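Here is a rough sketch of the idea, assuming the simplest possible approach: anything closer than a cutoff distance counts as the subject, and everything else counts as background. Real Portrait pipelines are far more sophisticated, and I'm dimming the background here as a stand-in for blurring it. Both function names and the tiny one-row photo are made up for the example.

```python
def subject_mask(depth, max_subject_distance):
    """True where a pixel is near enough to count as the subject."""
    return [[d <= max_subject_distance for d in row] for row in depth]


def dim_background(image, mask, factor=0.5):
    """Darken every RGB pixel that the mask marks as background."""
    result = []
    for img_row, mask_row in zip(image, mask):
        new_row = []
        for (r, g, b), is_subject in zip(img_row, mask_row):
            if is_subject:
                new_row.append((r, g, b))
            else:
                new_row.append((int(r * factor), int(g * factor), int(b * factor)))
        result.append(new_row)
    return result


# Hypothetical 1x3 photo: a person at 1.2 m between two walls at 4 m
image = [[(200, 200, 200), (220, 180, 160), (200, 200, 200)]]
depth_m = [[4.0, 1.2, 4.0]]
mask = subject_mask(depth_m, max_subject_distance=2.0)
portrait = dim_background(image, mask)
```

The subject pixel is left untouched while the two background pixels are darkened, and the RGB values never had to be analyzed to decide which was which.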

There are also apps like Focos, DepthCam, and Record3D, which allow you to take photos you’ve already captured, or capture live photos and video with depth maps, and view them in 3D on your phone. Record3D actually has a Looking Glass export option that allows you to pull RGB-D video taken from the app straight into Looking Glass Studio to view in 3D.

A couple of years back I wrote a blog post on extracting depth maps from iPhone Portrait Mode photos using ExifTool (it's a little out of date but still interesting to check out). Back then, I would extract the depth map, clean it up, and combine it with the photo as an RGB-D image to pull into the Looking Glass display. But we’ll get to more of that below.

Facebook 3D Photos

In 2018, Facebook rolled out a cool new feature that allows users to upload Portrait Mode photos (Android or iPhone) or RGB-D photos as 3D. The photo is responsive to the gyroscope in your phone, and tilts in 3D as you move your phone.

Looking Glass display

With a Looking Glass, you can pull in RGB-D images to view in real 3D on sight.

Same image rendered in real 3D in the Looking Glass

Even though depth maps are super useful for computers and software applications to understand 3D space, it’s still hard for humans to view this information. As you saw above in the iOS apps that deal with 3D data, you can (at most) use your finger to rotate the resulting 3D image to view it at different angles.

There are ways to render a depth map out into a point cloud and pull it into 3D software like Blender or a game engine like Unity, but you're still limited to experiencing your 3D image by panning around and rotating the scene view with your mouse.
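Turning a depth map into a point cloud usually means back-projecting each pixel through a pinhole camera model. The sketch below assumes the depth values are distances along the camera's Z axis and that you know the camera intrinsics (`fx`, `fy`, `cx`, `cy`). The values used here are placeholders I made up, not from any real camera.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth map into a list of (x, y, z) points.

    fx, fy are focal lengths in pixels and (cx, cy) is the principal point.
    Pixels with no valid depth (zero or negative) are skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth map in meters; 0.0 marks a pixel with no depth reading
depth_m = [
    [2.0, 2.0],
    [0.0, 4.0],
]
cloud = depth_to_point_cloud(depth_m, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

Each valid pixel becomes one point in 3D space, and a list like this is the kind of data you could write out to a point cloud file and import into a 3D tool.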

In a Looking Glass you’re actually able to use the 3D data in a visual way. Its software takes the depth map, creates a mesh for the RGB-D image, and stretches that mesh to be viewable in real 3D. The same data

In fact, Looking Glass has a built-in Image to Hologram conversion on Blocks, which allows you to take any image or photo in PNG or JPG format and convert it into an RGB-D image. Photos that your grandfather took, photos on your iPhone, works of art you love, 3D renders, or AI-generated images all become 3D holograms with this simple conversion tool.

To learn how, follow the tutorial linked below.